Beam Me Up, Alexa: Digital Assistants Hacked By Lasers

Lasers Mimicking Voice Commands Can Open Doors

It’s a laser-focused hack. Literally.

Voice-controlled assistants such as Amazon’s Alexa, Apple’s Siri and Google’s Assistant can be tricked into executing commands by precisely directing a laser beam at a device’s microphone, researchers revealed in a paper published Monday.

The surprising findings mean an intruder could potentially unlock doors, open garage doors and make purchases online by manipulating a targeted device, adding a new dimension of security and privacy issues to home assistants.

The research comes from the University of Electro-Communications in Japan and the University of Michigan.

The vulnerability is inherent within microphones in the digital assistants. Microphones use diaphragms - thin, flexible membranes that vibrate in response to sound waves, such as a voice. Those sound waves are translated into electrical signals, which are then interpreted as a command.

But diaphragms will also wobble when struck by laser light. The researchers fine-tuned light beams that would correspond to voice commands. They contend that authentication of voice commands is either lacking or non-existent on some devices, enabling a remote attack.
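As a rough illustration of how a beam could "correspond" to a voice command, the sketch below assumes the attacker varies the laser's brightness in step with an audio waveform by changing the current fed to the laser diode. The article does not spell out the modulation scheme, and the function names, bias current and swing values here are hypothetical, not figures from the research.

```python
import math

def tone(freq_hz, duration_s, rate_hz=16000):
    """Generate a sine tone as a stand-in for a recorded voice command."""
    n = round(duration_s * rate_hz)
    return [math.sin(2 * math.pi * freq_hz * i / rate_hz) for i in range(n)]

def audio_to_diode_current(samples, bias_ma=200.0, swing_ma=100.0):
    """Map audio samples in [-1, 1] to a diode drive current in milliamps.

    bias_ma and swing_ma are illustrative assumptions: the current sits at
    the bias when the audio is silent and moves up or down with the waveform,
    so the light intensity follows the sound.
    """
    currents = []
    for s in samples:
        s = max(-1.0, min(1.0, s))  # clip so the current stays within bias +/- swing
        currents.append(bias_ma + swing_ma * s)
    return currents

currents = audio_to_diode_current(tone(440, 0.01))
```

In this sketch, a microphone whose diaphragm responds to the light would see the same waveform as if the tone had been played aloud.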

“Light can easily travel long distances, limiting the attacker only in the ability to focus and aim the laser beam,” the researchers write on a website, Light Commands, summarizing the research.

Because the research poses immediate risks, the findings were shared before the study's release with Google; Amazon; Apple; August, which makes smart locks; Ford; Tesla; and Analog Devices, a manufacturer of small, high-performance microelectromechanical systems, or MEMS, microphones. The team has also notified ICS-CERT.

Efforts to reach some of the companies named were not successful. The New York Times reported they were all studying the conclusions. A Google spokesperson says: “We are closely reviewing this research paper. Protecting our users is paramount, and we’re always looking at ways to improve the security of our devices.”

Low Power, High Effectiveness

To test the devices, the researchers recorded four baseline commands, such as “What time is it?” and “Set the volume to zero,” each with the wake word, the trigger for a digital assistant.

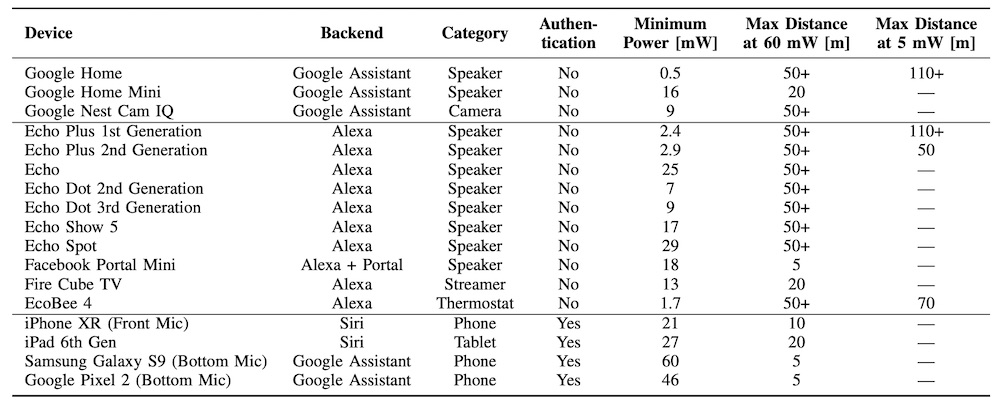

The effectiveness of a remote hijack depends on the type of device that is targeted. A low-power laser pointer of around 5 milliwatts (one milliwatt is one-thousandth of a watt) is enough to gain control of Alexa and Google Smart Home devices, the researchers determined. But a beam of 60 mW is necessary for phones, tablets and most other devices.

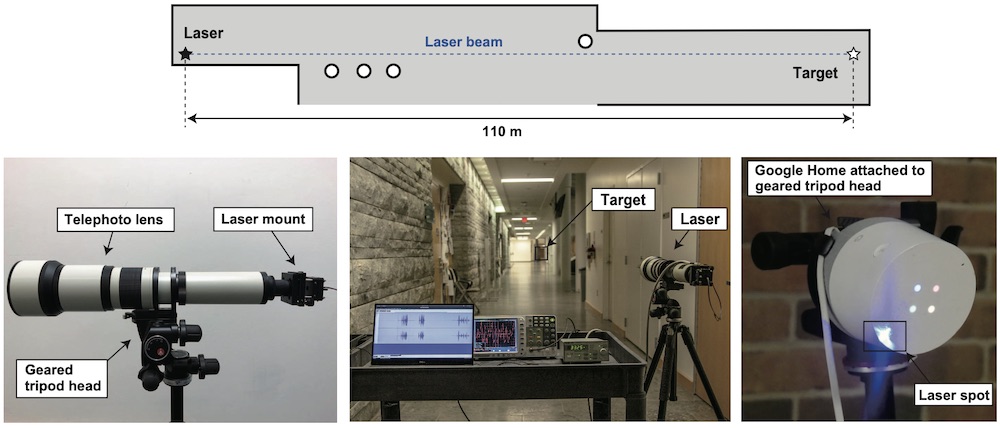

The equipment needed for an attack is cheap. A laser pointer; a laser diode driver, which supplies the current to the light source; a telephoto lens; and a sound amplifier can be purchased for a total of less than $600, the researchers write.

An attack depends on an unobstructed line of sight to a targeted device, but it will work through closed windows, according to the researchers. One drawback is that the laser must be aimed precisely at the microphone port. One workaround is to use a larger laser spot size, such as that of a commercially available 4,000 mW laser engraver. To extend the attack range, a telephoto lens can be used to focus the beam, with the operator using binoculars to aim, the researchers write.

There’s a risk an owner might simply notice a laser beam. To work around this, the researchers experimented with infrared light, which is invisible to the eye. They successfully injected commands into a Google Home from about a foot away using a Lilly Electronics 30 mW module with a 980-nm infrared laser. A Thorlabs LDC205C driver was used to reduce the power to 5 mW.

“The spot created by the infrared laser was barely visible using the phone camera, and completely invisible to the human eye,” they write. Further experiments weren’t carried out, however, because powerful infrared beams could cause eye damage.

Weak Authentication

Digital assistants use speech recognition technology, but it’s typically limited to content personalization rather than authentication. With Google and Apple, devices will recognize the wake word but not use voice recognition to authenticate the rest of the command, the researchers write.

Google’s Home and Alexa block voice purchases by unrecognized voices, but allow “previously unheard voices to execute security critical voice commands, such as unlocking doors,” the researchers write.

The story for phones and tablets is somewhat better. The Siri and Alexa apps can only be used if a device is unlocked. But devices without an input mechanism will rely on a PIN relayed by voice. That PIN could be vulnerable to eavesdropping or brute force, they write.

“We also observed incorrect implementation of PIN verification mechanisms,” according to the paper. “While Alexa naturally supports PIN authentication (limiting the user to three wrong attempts before requiring interaction with a phone application), Google Assistant delegates PIN authentication to third-party device vendors that often lack security experience.”
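The three-attempt limit described in the quote can be sketched as follows. This is a minimal illustration of the general lockout idea, not Amazon's or any vendor's actual implementation; the class name `PinGate` and the method `reset_from_app` are hypothetical.

```python
import hmac

class PinGate:
    """Hypothetical voice-PIN check that locks after repeated wrong guesses."""

    def __init__(self, pin, max_wrong=3):
        self._pin = pin
        self._max_wrong = max_wrong
        self._wrong = 0

    @property
    def locked(self):
        return self._wrong >= self._max_wrong

    def try_pin(self, guess):
        """Return True only for a correct PIN while the gate is not locked."""
        if self.locked:
            return False  # even the right PIN is refused until reset
        if hmac.compare_digest(guess, self._pin):  # constant-time comparison
            self._wrong = 0
            return True
        self._wrong += 1
        return False

    def reset_from_app(self):
        """Stand-in for the out-of-band step of unlocking via a phone app."""
        self._wrong = 0

gate = PinGate("4821")
results = [gate.try_pin(g) for g in ("0000", "1111", "2222", "4821")]
```

Without such a limit, a four-digit PIN has only 10,000 possible values, which is why the researchers flag brute force as a concern.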

Longer term, manufacturers could develop both software and hardware changes to detect light-based command injection attempts. That could include asking a user a randomized question before a session begins. Also, digital assistants often have more than one microphone, which should all receive the same input from an authorized user. Simply better protecting the microphone diaphragm from light could also be an improvement.
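One way the multiple-microphone idea might work is sketched below: a spoken command should register at a similar level on every microphone, while a laser aimed at a single port would excite only one. The function name, the loudness ratio and the noise floor are assumptions chosen for illustration, not values from the paper.

```python
import math

def rms(samples):
    """Root-mean-square level of one microphone channel."""
    if not samples:
        return 0.0
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def looks_like_single_mic_injection(channels, ratio_threshold=10.0, floor=1e-4):
    """Flag input where one channel is far louder than the quietest one.

    ratio_threshold and floor are hypothetical tuning values.
    """
    levels = [rms(ch) for ch in channels]
    if len(levels) < 2:
        return False  # nothing to compare against
    loudest, quietest = max(levels), min(levels)
    if loudest < floor:
        return False  # silence on every channel
    return loudest > ratio_threshold * max(quietest, floor)

voice = [0.5 * math.sin(2 * math.pi * 440 * i / 16000) for i in range(160)]
silent = [0.0] * 160

spoken = looks_like_single_mic_injection([voice, voice, voice])
injected = looks_like_single_mic_injection([voice, silent, silent])
```

Here `spoken` is False because all three channels carry the same signal, and `injected` is True because only one channel does. A real device would also need to account for differences in microphone placement and sensitivity.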